Five things to remember when reading reports on scientific studies

Last Updated September 20, 2018 · First Published March 4, 2017

We see it over and over again: A new health study is published in a peer-reviewed medical journal, and it’s picked up by the media (thanks to the accompanying press release), summarized by reporters, and then given a provocative headline by their editors.

I recently came across a press release from the National Institutes of Health announcing the results of a study, published in the New England Journal of Medicine, which concluded that “coffee drinkers have lower risk of death.”

The researchers followed more than 400,000 men and women, aged 50-71 at the outset, from 1995 to 2008. They excluded people who already had cancer, heart disease, or stroke. Coffee consumption was assessed (just once!) at the start, via self-report.* They then looked at how many people died in the thirteen years that followed.

I want to use this particular study today not to discredit it, but as an example of how things can get twisted and overblown in reporting and analysis.

1. Correlation does not equal causation

Just because coffee drinkers live longer doesn’t necessarily mean it’s the coffee that helps them do so. This study showed only a correlation. In fact, the study showed that coffee drinkers overall actually had a higher risk of death: “In age-adjusted models, the risk of death was increased among coffee drinkers.”

They then point out that coffee drinkers were more likely to smoke (why don’t we wonder if coffee caused them to smoke?) — but after they adjust for “tobacco-smoking status and other potential confounders, there was a significant inverse association between coffee consumption and mortality.”

So what does that mean? The researchers had to use statistical analysis to try to mathematically remove smoking and other probable causes of death from the equation. (In this case, they also note that the results were similar for a subgroup of people who had never smoked, which is a good sign that the adjustment is doing its job.)
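To make “adjusting for smoking” concrete, here is a small sketch using entirely hypothetical, made-up counts (not data from the coffee study). In this toy population, coffee has no effect on death at all, but smokers drink more coffee and die more often. The study itself relied on more elaborate statistical models; this sketch swaps in a much simpler technique, stratification with a Mantel-Haenszel risk ratio, and the names `strata`, `crude_risk_ratio`, and `mantel_haenszel_risk_ratio` are illustrative only.

```python
# HYPOTHETICAL toy counts, invented for illustration (NOT from the coffee study).
# Within each smoking group, coffee drinkers and non-drinkers die at the same
# rate, but smokers are much more likely to drink coffee.
# Each stratum: (deaths_coffee, n_coffee, deaths_no_coffee, n_no_coffee)
strata = {
    "smokers": (240, 800, 60, 200),
    "non_smokers": (20, 200, 80, 800),
}


def crude_risk_ratio(strata):
    """Risk ratio that ignores smoking entirely (all strata pooled together)."""
    d1 = sum(s[0] for s in strata.values())
    n1 = sum(s[1] for s in strata.values())
    d0 = sum(s[2] for s in strata.values())
    n0 = sum(s[3] for s in strata.values())
    return (d1 / n1) / (d0 / n0)


def mantel_haenszel_risk_ratio(strata):
    """Smoking-adjusted risk ratio: compare like with like, then pool."""
    num = 0.0
    den = 0.0
    for d1, n1, d0, n0 in strata.values():
        total = n1 + n0
        num += d1 * n0 / total  # weighted deaths among coffee drinkers
        den += d0 * n1 / total  # weighted deaths among non-drinkers
    return num / den


print(f"crude RR:    {crude_risk_ratio(strata):.2f}")
print(f"adjusted RR: {mantel_haenszel_risk_ratio(strata):.2f}")
```

In this made-up example, the crude comparison makes coffee look harmful (a risk ratio of about 1.86) purely because smokers drink more coffee. Once smokers are compared with smokers and non-smokers with non-smokers, the ratio drops to 1.00. Adjustment can reveal or remove an association, but it still can’t prove causation.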

Ultimately, though, the conclusion says this: “Whether this was a causal or associational finding cannot be determined from our data.”

So it does not mean that drinking coffee will make you live longer. It means that people who drink coffee tend to live longer (and it doesn’t even say how much longer). It may have nothing to do with the coffee itself: it may just be that the kind of person who drinks coffee is more likely to live longer for some other reason entirely.

2. Headlines can be just plain wrong

In today’s incredibly fast, not-so-detail-oriented news cycle, the headline gets the most attention, and the nitty-gritty details of the articles (and the studies) are often missed. Some news outlets choose their words carefully, but others tend to be more alarmist and, as a result, less accurate.

Take these headlines, for example, about the coffee study:

- Coffee Drinkers Have Lower Risk of Overall Death, Study Shows (ABC News)

- Can Coffee Help You Live Longer? We Really Want To Know (NPR)

- Study: Coffee lowers risk of death (The Columbus Dispatch)

- Coffee Lowers Disease Risk: Study (The Daily Beast, which is part of Newsweek)

- Coffee-drinking lowers risk of death, big study finds (The Boston Globe)

- Coffee Shown To Reduce Risk Of Death: Study (NBC Miami)

- Coffee Reduces Death Risk (About.com)

I’d say that ABC’s headline (#1) is the most faithful to the study’s findings, since it says “Coffee Drinkers” instead of “Coffee.” NPR (#2) dodges the problem by turning it into a question. The remaining five completely misinterpret the study.

As I mentioned above, the study did not find that coffee causes a lower risk of death; the authors specifically point out that there was only a correlation! But when you read those headlines, it’s hard not to think, “Great! I’ll drink more coffee so I’m less likely to die!”

3. Reporting is an interpretation

Just as headlines can be misleading, so can the reporting. By definition, if you’re reading an article from a news outlet, you’re reading a reporter’s interpretation of the study. Good reporting, of course, will be careful to get the facts right. But sometimes small details shift ever so slightly, and in studies like this one, small details can make a large difference, even at large, reputable news organizations.

As a simple example, a Los Angeles Times article says, “And the link was stronger in coffee drinkers who had never smoked.” That’s sort of correct, but not technically accurate. As I mentioned earlier, coffee drinkers as a group had a higher risk of death until the researchers mathematically adjusted for smoking, and among people who had never smoked, the association between coffee and death was “similar” (according to the abstract on the NEJM website), not stronger.

My point is that once someone else interprets a study, the details may shift, even slightly, and when the line between correlation and causation is already fuzzy, that shift can make a big difference in how we, the casual readers, interpret the findings.

4. Study authors and funders are often biased

I don’t think this is necessarily the case for this particular coffee study, but it bears pointing out: it’s really, really important to know who paid for a study, and who designed and conducted it. Although ethical standards require disclosure of conflicts of interest, they don’t require recusal the way a judge must recuse himself or herself from a case. The prevailing practice is that disclosure is enough, as if knowing that someone is biased were enough to eliminate the bias itself (guess what? it isn’t).

Reputable medical journals are, of course, generally good about disclosing these conflicts of interest. But those details are often buried, or omitted altogether, by the time a story reaches the mainstream news.

If the authors of this coffee study owned stock in Starbucks, would that change your opinion of their findings? Probably on an intellectual level, yes. But I bet there would still be that little voice in the back of your mind that now thinks it’s a good idea to drink more coffee.

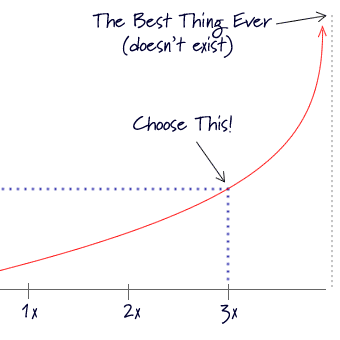

5. Percentages are incredibly misleading

This is the biggest pitfall, in my opinion, because it is so often overlooked: studies often report a change as a percentage of the original rate (a relative change), not as a difference in the overall probability (an absolute change).

In this case, the study found that “relative to men and women who did not drink coffee, those who consumed three or more cups of coffee per day had approximately a 10 percent lower risk of death.”

Okay, so coffee drinkers were 10% less likely to die. Here’s the important part: that does not mean their likelihood of dying dropped by a full 10 percentage points. It means the likelihood fell by 10% of its original value, not by 10 points on the overall scale.

In the coffee study, they tracked 229,119 men, and 33,731 of them died during the follow-up period. So the overall risk of dying for a man in this group was 14.7%.

The study found a roughly 10% reduction in the death rate of coffee drinkers. Ten percent of 14.7% is 1.47%, so a little bit of math reveals that the coffee drinkers had about a 13.23% “chance” of dying, which gets reported as a 10% improvement. But in absolute terms, it’s an improvement of just 1.47 percentage points.

So all these headlines that claim “Coffee reduces death risk”? It means that if you’re a coffee drinker aged 50-71, you have a 13.23% chance of dying over the next thirteen years instead of a 14.7% chance.
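The arithmetic above can be sketched in a few lines. This minimal example uses the figures quoted for the men in the study and applies the roughly 10% relative reduction to the overall male death rate, which is the same simplification made above (the 14.7% includes coffee drinkers too). The helper `absolute_from_relative` is a hypothetical name, not anything from the study.

```python
def absolute_from_relative(baseline_risk, relative_reduction):
    """Return (new_risk, absolute_reduction) for a given relative reduction.

    Risks are probabilities between 0 and 1; relative_reduction is a
    fraction of the baseline (0.10 means "10% lower risk").
    """
    new_risk = baseline_risk * (1 - relative_reduction)
    return new_risk, baseline_risk - new_risk


# Figures quoted above for the men in the coffee study.
deaths = 33_731
men = 229_119
baseline_risk = deaths / men  # about 0.147

coffee_risk, absolute_reduction = absolute_from_relative(baseline_risk, 0.10)

print(f"baseline risk:       {baseline_risk:.1%}")
print(f"coffee-drinker risk: {coffee_risk:.1%}")
print("relative reduction:  10%")
print(f"absolute reduction:  {absolute_reduction * 100:.2f} percentage points")
```

The same “10% lower risk” headline would describe a drop from 50% to 45% or from 1% to 0.9%, which is why the absolute difference is worth computing before reacting to a relative one.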

(These numbers are looking at overall totals — in reality we’d need to split it up into sub-groups, like smoking-coffee-drinkers and non-smoking-coffee-drinkers, and how many cups of coffee they drank each day… but I’m simplifying a bit to make the point.)

This may be statistically significant, and it is certainly a strong enough result to justify further research into the potential death-defying properties of coffee, but it hardly warrants bumping your daily coffee intake to four or five cups, as the headlines would lead you to believe.

So next time you’re reading about a study, keep all this in mind, and take everything with a big grain of salt.**

—

* Self-reported recall is notoriously lousy; people under- or over-report almost without fail. And in this case, the researchers asked people how much coffee they currently drank and then never followed up on their coffee-drinking habits over the next thirteen years. How many people quit drinking coffee? How many increased their intake? Keeping an eye on where the data comes from should probably be its own item in the list above.

** But not actual salt, since that’ll raise your blood pressure and correlate with a 15.2763% greater chance of death…

Photo by Alvin Trusty, used under Creative Commons License.

I came across another perfect example of #5 today: ABC News reported on a new study that found lower rates of cancer among people who took a multivitamin (vs. a placebo). ABC called it an 8% lower risk of cancer, but that’s incredibly misleading. If you look at the actual study in JAMA, you’ll see that the net result was 18.3 cancers per 1,000 person-years for placebo, versus 17.0 with the multivitamin. A drop from 18.3 to 17 is indeed a relative reduction of roughly 7 to 8%, but this reporting makes it sound like your overall chance of getting cancer falls by 8% if you take a multivitamin.

In reality, the study showed that taking a multivitamin resulted in 1.3 fewer cancers per 1,000 person-years. So taking a multivitamin shifts the odds in your favor by about 0.13 percentage points per year of follow-up, not by 8%.
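Here is a short sketch of the same relative-versus-absolute comparison for rates given per 1,000 person-years, using the two figures quoted above. The raw figures give a relative reduction of about 7.1%; the small gap from the reported 8% presumably reflects the study’s own statistical model, which this sketch does not reproduce. The function name `compare_rates` is hypothetical.

```python
def compare_rates(control_per_1000, treated_per_1000):
    """Compare two event rates given per 1,000 person-years.

    Returns (relative_reduction, absolute_reduction_per_person_year).
    """
    control = control_per_1000 / 1000  # events per person-year
    treated = treated_per_1000 / 1000
    absolute = control - treated
    return absolute / control, absolute


# Placebo vs. multivitamin rates quoted above.
relative, absolute = compare_rates(18.3, 17.0)
print(f"relative reduction: {relative:.1%}")
print(f"absolute reduction: {absolute:.2%} per person-year")
```

Note that a rate per person-year is not the same as a probability over the whole trial; the 0.13% is per year of follow-up.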